Using LLM as an Assistant in GIE

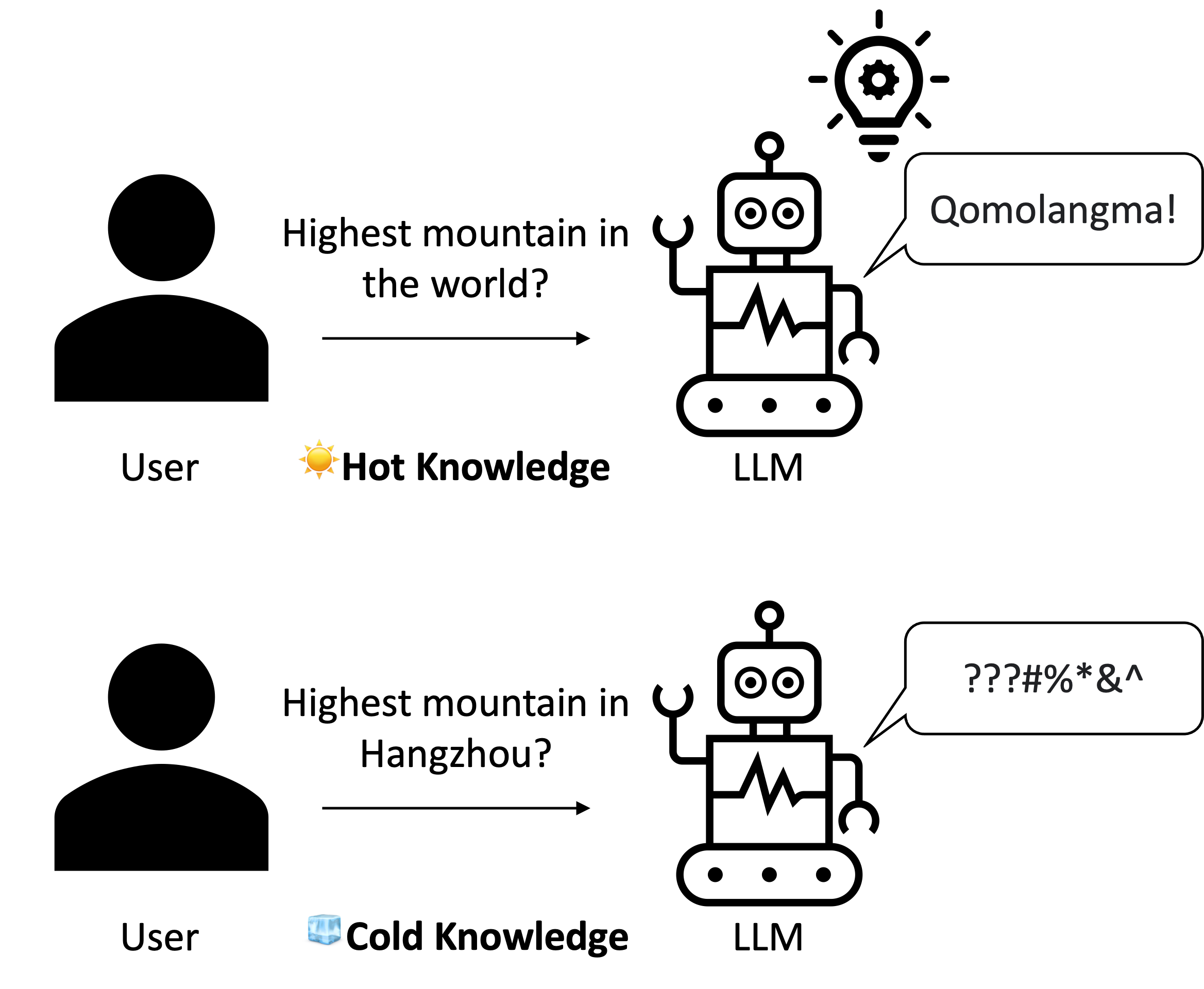

People increasingly turn to LLMs rather than traditional search engines to answer their questions, largely because LLMs are so convenient. However, relying solely on an LLM for question answering has shortcomings. The knowledge of most LLMs, such as the GPT models, is limited to their training data, which can be up to two years old. Additionally, they cannot access the Internet to retrieve the latest information. As a result, LLMs commonly give misleading answers in less well-known areas, where they have also seen less training data.

Figure 1. LLM’s different responses to hot and cold knowledge

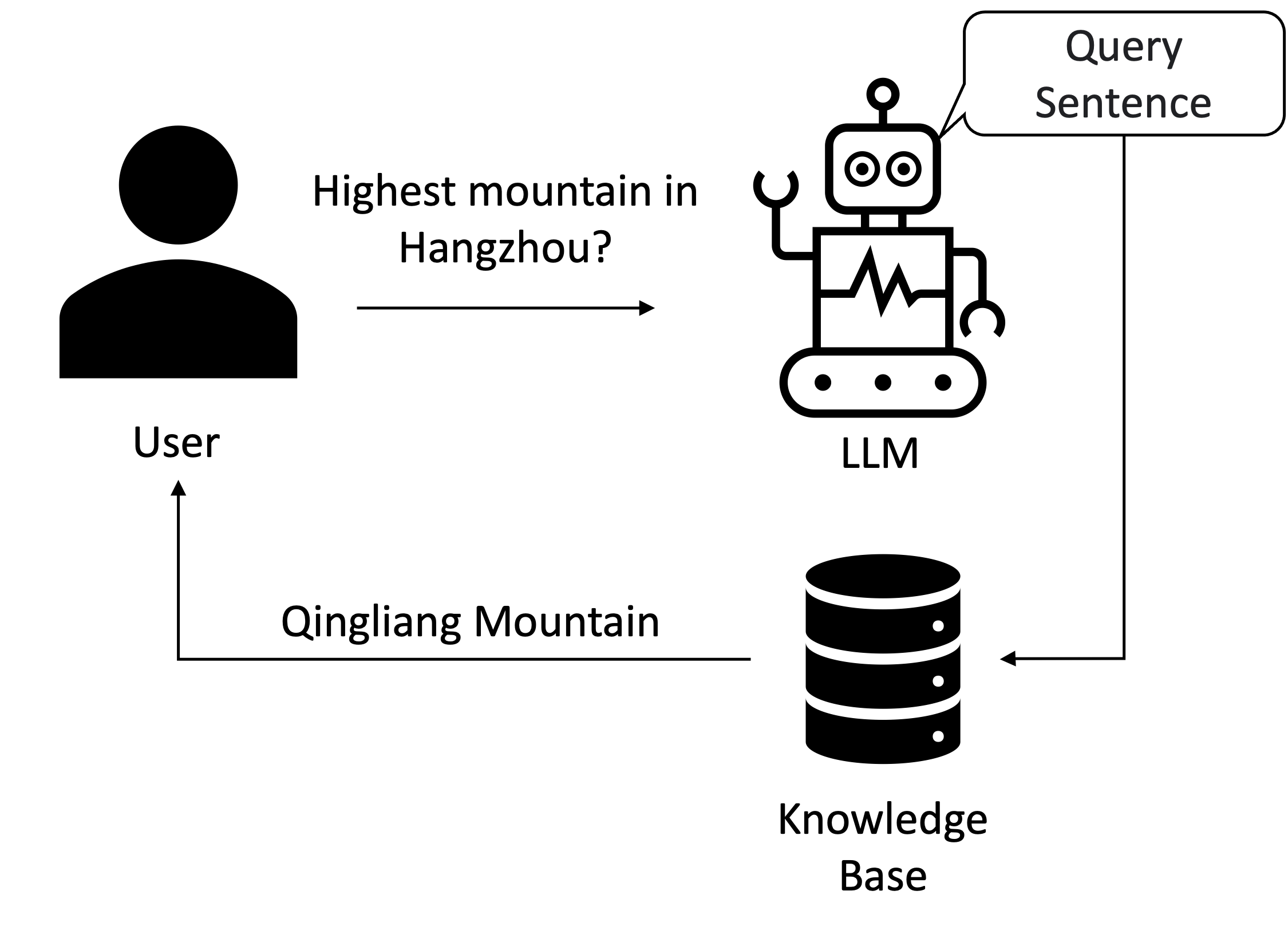

In fact, knowledge in less well-known areas can be organized in a knowledge base, such as an RDBMS or a graph. An LLM can then serve as an assistant that helps users retrieve the required information from the knowledge base by translating the user’s question directly into an executable query. Because the answer comes from the knowledge base rather than from the model’s memory, this approach can significantly reduce the misleading information that LLMs generate in less well-known areas.

Figure 2. Using LLM as an assistant to help retrieve information from Knowledge Base
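To make the pattern in Figure 2 concrete, here is a minimal sketch of how a question and a schema summary might be combined into a prompt for the LLM. This is an illustration only: `build_prompt` and the prompt wording are hypothetical and are not the prompt that GIE actually sends.

```python
def build_prompt(schema_summary: str, question: str) -> str:
    """Combine a schema description and a user question into one prompt.

    Hypothetical helper for illustration; GIE's real prompt may differ.
    """
    return (
        "You translate questions into Cypher queries.\n"
        "Use only the labels and properties in this schema:\n"
        f"{schema_summary}\n"
        f"Question: {question}\n"
        "Answer with a single Cypher query and nothing else."
    )


# A hand-written schema summary, used here only as sample input.
schema = "Vertex label: Person (property: name). Edge labels: son_of, wife_of."
print(build_prompt(schema, "Whose son is Jia Baoyu?"))
```

Restricting the model to the labels in the schema is what ties the generated query to the knowledge base instead of to the model's own recollection.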

Following this pattern, we integrate GPTs into GIE as an assistant using OpenAI’s API. Now, even if you are a complete novice when it comes to graphs, with the assistance of LLMs, you can retrieve the information you need from the graph conveniently. This document will use the graph of Dream of the Red Chamber as an example to guide you through how to use LLM as an Assistant in GIE.

0. Environment

The integration of LLMs is available for GraphScope versions 0.25 and later, and it uses langchain for prompts. Therefore, to begin, please make sure you have the following environment:

python>=3.8

graphscope>=0.25.0

pandas==2.0.3

langchain>=0.0.316

We strongly recommend creating a clean Python virtual environment for GraphScope and those dependencies. If you are unsure how to do this, you can follow these instructions.
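Once the virtual environment is active, a quick check like the one below may help confirm that the listed packages are installed. It is a rough sketch: `version_tuple` and `check_environment` are hypothetical helpers, the comparison only looks at the leading numeric parts of each version, and pandas is treated as a minimum even though the list above pins it exactly.

```python
import re
import sys
from importlib import metadata

# Versions taken from the requirement list above.
MINIMUM_VERSIONS = {
    "graphscope": "0.25.0",
    "pandas": "2.0.3",
    "langchain": "0.0.316",
}


def version_tuple(version):
    """Turn '0.25.0' into (0, 25, 0); stop at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment(minimums=MINIMUM_VERSIONS):
    """Return a dict mapping each package name to a short status string."""
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "not installed"
            continue
        if version_tuple(installed) >= version_tuple(minimum):
            report[name] = f"{installed} (ok)"
        else:
            report[name] = f"{installed} (older than {minimum})"
    return report


if __name__ == "__main__":
    python_ok = sys.version_info >= (3, 8)
    print("python:", sys.version.split()[0], "(ok)" if python_ok else "(older than 3.8)")
    for name, status in check_environment().items():
        print(f"{name}: {status}")
```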

1. Download Datasets

This document uses the graph of Dream of the Red Chamber as its example. You can download the dataset by cloning its repository:

git clone https://github.com/Suchun-sv/The-Dream-of-the-Red-Chamber.git

Or you can visit the Git Repository, select the Download ZIP button to download the dataset, and then unzip it.

Finally, move the dataset to the directory where you run your Python file.

# unzip The-Dream-of-the-Red-Chamber.zip # if you download the zip file

# move the dataset to the directory where you run your python file

mv /path/to/The-Dream-of-the-Red-Chamber/data ./data
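Before loading the graph, it can be worth confirming that the two CSV files used in the next step are where the code expects them. The sketch below assumes the `./data` layout produced by the commands above; `missing_files` is a hypothetical helper, and the file names are the ones read in the loading code.

```python
from pathlib import Path

# File names as they appear in the graph-loading code of the next section.
EXPECTED_FILES = ("stone_story_nodes_relation.csv", "stone_story_edges.csv")


def missing_files(data_dir, expected=EXPECTED_FILES):
    """Return the expected file names that are not present in data_dir."""
    base = Path(data_dir)
    return [name for name in expected if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_files("./data")
    if missing:
        print("Missing from ./data:", ", ".join(missing))
    else:
        print("All expected dataset files are present.")
```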

2. Load the Graph

After preparing the dataset, use the following Python code to load it into GIE and build the graph.

import graphscope as gs

import pandas as pd

gs.set_option()

sess = gs.session(cluster_type='hosts')

graph = sess.g()

nodes_sets = pd.read_csv("./data/stone_story_nodes_relation.csv", sep=",")

graph = graph.add_vertices(nodes_sets, label="Person", vid_field="id")

edges_sets = pd.read_csv("./data/stone_story_edges.csv")

# Add the edges one label at a time, so that each relation type gets its own edge label
for edge_label in edges_sets['label'].unique():

    edges_sets_ = edges_sets[edges_sets['label'] == edge_label]

    graph = graph.add_edges(edges_sets_, src_field="head", dst_field="tail", label=edge_label)

print(graph.schema)

If you see the following output in your terminal or console, it suggests that the dataset has been successfully loaded into GIE.

Properties: Property(0, eid, LONG, False, ), Property(1, label, STRING, False, )

Comment: Relations: [Relation(source='Person', destination='Person')]

type: EDGE

Label: daughter_in_law_of_grandson_of

Properties: Property(0, eid, LONG, False, ), Property(1, label, STRING, False, )

Comment: Relations: [Relation(source='Person', destination='Person')]

type: EDGE

Label: wife_of

Properties: Property(0, eid, LONG, False, ), Property(1, label, STRING, False, )

Comment: Relations: [Relation(source='Person', destination='Person')]

type: EDGE

...
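If loading fails or the schema looks incomplete, one thing that may be worth ruling out is an edge whose `head` or `tail` does not match any vertex `id`. The following is a minimal sketch of such a check on plain row dictionaries. `dangling_edges` is a hypothetical helper, and the sample rows are made up for illustration rather than taken from the dataset.

```python
def dangling_edges(node_rows, edge_rows):
    """Return the edges whose 'head' or 'tail' is not a known vertex 'id'."""
    known = {str(row["id"]) for row in node_rows}
    return [
        edge
        for edge in edge_rows
        if str(edge["head"]) not in known or str(edge["tail"]) not in known
    ]


# Illustrative rows only; these ids are invented, not real dataset values.
sample_nodes = [{"id": "1"}, {"id": "2"}]
sample_edges = [
    {"head": "1", "tail": "2", "label": "son_of"},
    {"head": "2", "tail": "3", "label": "wife_of"},  # "3" is not a vertex id
]

print(dangling_edges(sample_nodes, sample_edges))
# prints [{'head': '2', 'tail': '3', 'label': 'wife_of'}]
```

With the real data, the same function could be fed `nodes_sets.to_dict("records")` and `edges_sets.to_dict("records")` from the loading code above.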

3. Set Endpoint and API Key

Since GIE’s LLM assistant module uses OpenAI’s API, you should set your endpoint and API key before using it:

endpoint = "https://xxx" # use your endpoint

api_key = "xxx" # replace with your own API key
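To keep the key out of source files, one option is to read both values from environment variables. The sketch below assumes two variable names, `GIE_LLM_ENDPOINT` and `GIE_LLM_API_KEY`, which are hypothetical and not defined by GraphScope; `load_credentials` is likewise an illustrative helper.

```python
import os

# Hypothetical variable names chosen for this example; GraphScope does not define them.
ENDPOINT_VAR = "GIE_LLM_ENDPOINT"
API_KEY_VAR = "GIE_LLM_API_KEY"


def load_credentials(env=os.environ):
    """Return (endpoint, api_key) from a mapping, or raise if either is missing."""
    endpoint = env.get(ENDPOINT_VAR)
    api_key = env.get(API_KEY_VAR)
    missing = [
        name
        for name, value in ((ENDPOINT_VAR, endpoint), (API_KEY_VAR, api_key))
        if not value
    ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return endpoint, api_key


# Usage, after exporting both variables in your shell:
# endpoint, api_key = load_credentials()
```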

4. Generate Graph Query Sentence from Questions

In GIE’s LLM assistant module, the query_to_cypher function allows you to generate corresponding query sentences from provided questions.

from graphscope.langchain_prompt.query import query_to_cypher

Simply define your question and pass it to query_to_cypher. It will generate the corresponding Cypher query based on the question and the information of the loaded graph. Here’s an example of the LLM assistant generating a Cypher query for the question, “Whose son is Baoyu Jia?”

from graphscope.langchain_prompt.query import query_to_cypher

question = "贾宝玉是谁的儿子?" # "Whose son is Baoyu Jia?"; asked in Chinese because the graph stores Chinese names

cypher_sentence = query_to_cypher(graph, question, endpoint=endpoint, api_key=api_key)

print(cypher_sentence)

The generated query sentence would look like this:

MATCH (p:Person)-[:son_of]->(q:Person)

WHERE p.name = '贾宝玉'

RETURN q.name

Please note that the generated query sentence may not be 100% correct, and you have the option to edit the query sentence as needed.
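Since the generated query can be wrong, a lightweight review step before execution may catch the most obvious problems. The sketch below is a heuristic, not a Cypher parser: `review_cypher` is a hypothetical helper that uses regular expressions to flag labels that are not in the graph and keywords that would modify data.

```python
import re

# Keywords that indicate the query would modify data rather than just read it.
WRITE_KEYWORDS = ("CREATE", "MERGE", "DELETE", "SET", "REMOVE", "DROP")


def review_cypher(query, vertex_labels, edge_labels):
    """Return a list of warnings; an empty list means nothing suspicious was found."""
    warnings = []
    # Labels inside node patterns, e.g. (p:Person)
    for label in re.findall(r"\(\s*\w*\s*:\s*(\w+)", query):
        if label not in vertex_labels:
            warnings.append(f"unknown vertex label: {label}")
    # Labels inside relationship patterns, e.g. [:son_of] or [r:son_of]
    for label in re.findall(r"\[\s*\w*\s*:\s*(\w+)", query):
        if label not in edge_labels:
            warnings.append(f"unknown edge label: {label}")
    for keyword in WRITE_KEYWORDS:
        if re.search(rf"\b{keyword}\b", query, flags=re.IGNORECASE):
            warnings.append(f"write keyword present: {keyword}")
    return warnings


generated = (
    "MATCH (p:Person)-[:son_of]->(q:Person)\n"
    "WHERE p.name = '贾宝玉'\n"
    "RETURN q.name"
)
print(review_cypher(generated, {"Person"}, {"son_of", "wife_of"}))
# prints []
```

The label sets passed in here are written by hand from the example. In practice they would come from the loaded graph's schema, and any warning is a prompt to edit the query manually rather than proof that it is wrong.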

5. Execute Generated Query Sentence with GIE

Lastly, you can execute the generated Cypher query in the built-in GIE interactive sessions. Here is an example.

# Start the GIE interactive session

g = gs.interactive(graph, params={'neo4j.bolt.server.disabled': 'false', 'neo4j.bolt.server.port': 7687})

# Submit the query sentence

q1 = g.execute(cypher_sentence, lang="cypher")

# Check the query results

print(q1.records)

Here the output would be “贾政” (Jia Zheng), which is accurate according to the story of Dream of the Red Chamber.